SGE/AI Overview Tracking Cadence: Weekly Rituals

As generative AI reshapes search and other products, teams need a reliable way to track how their AI systems and Search Generative Experience (SGE) features are performing. Organizations are adopting structured weekly rituals to monitor, calibrate, and improve both the underlying models and the user-facing generative features. A weekly cadence gives teams a methodical way to assess AI outcomes, understand how users engage with generative interfaces, and make iterative improvements toward long-term goals.

What is SGE/AI Weekly Tracking?

An SGE/AI overview tracking cadence is a recurring schedule of evaluations and discussions for assessing performance, identifying trends, and aligning stakeholders on immediate priorities and roadblocks. These rituals act as both retrospective and forward-looking check-ins for actively managing AI features, particularly Search Generative Experience elements, and they support ongoing development with an agile mindset.

In environments where search intelligence and generative AI coexist, such weekly evaluations are invaluable. They shed light on what’s working, what needs improvement, and what user data reveals about context-driven content exposure.

Why Weekly Rituals Matter

Moving from monthly or quarterly AI updates to a weekly cadence introduces significant benefits for tech teams, product leads, and data operations. Here’s why this rhythm matters:

- Faster detection of performance regressions: Weekly reviews allow teams to catch bugs, drops in accuracy, or unexpected drift before those issues become widespread.

- Closer alignment between data and product teams: Regular meetings encourage continual communication and a shared understanding of performance targets.

- Agility in experimentation: Weekly cadences foster a test-and-learn culture where quick wins and learnings can be deployed iteratively.

- More granular trend spotting: Teams can monitor shifts in user behavior, engagement with SGE features, or changes in prompt quality related to generative AI use.

When executed effectively, these rituals become the operational heartbeat of AI-driven teams, ensuring that every decision is grounded in current performance data and end-user feedback.
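
The regression check described in the first bullet above can be sketched as a week-over-week comparison. This is a minimal illustration, not a prescribed method: the metric names, the tolerance values, and the `MetricSpec` and `flag_regressions` helpers are all hypothetical, and the numbers are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class MetricSpec:
    name: str                 # hypothetical metric name
    higher_is_better: bool    # False for metrics such as latency
    max_relative_drop: float  # tolerated relative worsening, e.g. 0.05 = 5%


def flag_regressions(previous, current, specs):
    """Return (name, relative_change) for metrics that worsened beyond tolerance."""
    flagged = []
    for spec in specs:
        prev, curr = previous[spec.name], current[spec.name]
        if prev == 0:
            continue  # relative change is undefined; review this metric manually
        change = (curr - prev) / prev
        # Normalize direction so a positive value always means "got worse".
        worsening = -change if spec.higher_is_better else change
        if worsening > spec.max_relative_drop:
            flagged.append((spec.name, round(change, 4)))
    return flagged


# Illustrative numbers only, not real measurements.
last_week = {"answer_accuracy": 0.90, "p95_latency_ms": 800.0, "sge_click_rate": 0.20}
this_week = {"answer_accuracy": 0.81, "p95_latency_ms": 820.0, "sge_click_rate": 0.21}
specs = [
    MetricSpec("answer_accuracy", higher_is_better=True, max_relative_drop=0.05),
    MetricSpec("p95_latency_ms", higher_is_better=False, max_relative_drop=0.10),
    MetricSpec("sge_click_rate", higher_is_better=True, max_relative_drop=0.05),
]

for name, change in flag_regressions(last_week, this_week, specs):
    print(f"Regression: {name} changed by {change:+.1%} week over week")
```

With these sample values only the accuracy drop exceeds its tolerance, so it is the single item the review would need to discuss. Per-metric tolerances are a design choice: they keep noisy metrics from crowding the agenda.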

Core Components of a Weekly SGE/AI Review

A typical weekly AI/SGE tracking ritual should follow a consistent, modular agenda. It may involve team-wide syncs or focused sessions with smaller groups of stakeholders. Below are the key sections that make up an effective session:

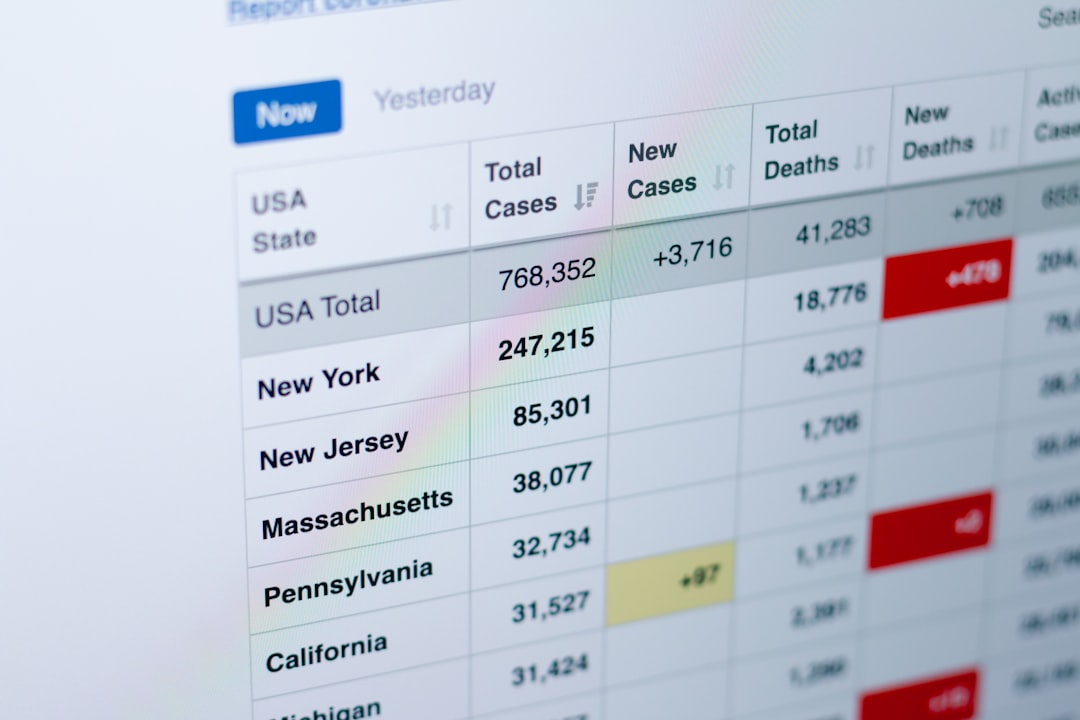

- Review of Weekly Metrics: This includes traffic to SGE features, AI response rate benchmarks, model quality KPIs, and latency numbers. These are often presented on dashboards so that trends are visible at a glance.

- Incident Recap: A recap of any outages, bugs, or false positives in detection or generation workflows, including their fixes and learnings.

- Experiment Analysis: Results from A/B tests on prompt phrasing, output formatting, or model tuning. Also includes learnings from canceled or inconclusive tests.

- User Feedback Themes: This segment draws insights from direct user surveys, platform usage logs, or social listening to identify sentiment trends.

- Action Planning: A list of short-term priorities or adjustment areas for teams handling prompt optimization, retrieval tuning, or UI changes.
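
For the Experiment Analysis segment, one common way to judge an A/B test on a binary outcome (for example, whether a user engaged with a generated answer) is a two-proportion z-test. The sketch below assumes that setup; the function name, the variant labels, and the counts are hypothetical, and a team may well prefer a different statistical method.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for a difference between two proportions."""
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts only: engaged sessions out of total sessions per variant.
z, p_value = two_proportion_z_test(
    successes_a=480, total_a=4000,   # variant A: current prompt phrasing
    successes_b=540, total_b=4000,   # variant B: revised prompt phrasing
)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise, but the threshold for acting on it, and whether the test ran long enough, remain judgment calls for the review itself.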

Best Practices for Running Weekly Rituals

While the format and content of weekly rituals may evolve with scale, some best practices help ensure their effectiveness from the beginning:

- Keep it Time-Bound: Limit meetings to 45–60 minutes. This encourages clarity and preparation in advance.

- Leverage Visual Aids: Dashboards and metric snapshots should be screen-shared or circulated to avoid time-consuming data exploration during the session.

- Start and End with Key Highlights: Keep the focus sharp by framing the session with one major win and one major challenge.

- Assign Action Ownership Publicly: Make it clear who is tasked with follow-ups. This reinforces accountability and ensures continuous momentum.

- Record and Summarize: Document the weekly meeting in a succinct email or shared doc to allow absentees to catch up and enable continuity.
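
The last three practices (framing with a win and a challenge, assigning owners, and circulating a written summary) can be combined into a small recap template. This is only one possible shape: the `ActionItem` and `WeeklyRecap` classes, their fields, and the sample entries are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ActionItem:
    task: str
    owner: str
    due: str  # ISO date string, e.g. "2025-01-17"


@dataclass
class WeeklyRecap:
    week_of: str
    major_win: str
    major_challenge: str
    actions: list = field(default_factory=list)

    def render(self) -> str:
        """Build a plain-text summary suitable for an email or shared doc."""
        lines = [
            f"Weekly SGE/AI review, week of {self.week_of}",
            f"Win: {self.major_win}",
            f"Challenge: {self.major_challenge}",
            "Action items:",
        ]
        for item in self.actions:
            lines.append(f"- {item.task} (owner: {item.owner}, due: {item.due})")
        return "\n".join(lines)


# Sample content is invented for illustration.
recap = WeeklyRecap(
    week_of="2025-01-13",
    major_win="Latency on generated answers improved",
    major_challenge="Accuracy dipped on long-tail queries",
    actions=[ActionItem("Audit retrieval for long-tail queries", "Priya", "2025-01-17")],
)
print(recap.render())
```

Keeping the owner and due date on every action item makes the follow-up visible to anyone who missed the meeting.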

Role of Cross-functional Teams

SGE and AI tracking depends on buy-in and involvement from a diverse set of team members. These may include:

- Machine Learning Engineers: Provide insights on model behavior, retraining cycles, and latency improvements.

- Product Managers: Correlate AI performance data with user experiences and roadmap priorities.

- Data Analysts: Spot statistical anomalies and derive actionable insights through trend monitoring.

- UX/Content Designers: Evaluate generated output readability and contextual suitability.

When multiple voices contribute to the same ritual, blind spots are minimized and a more holistic picture emerges — vital for managing complex systems like real-time generative search engines.

Leveraging Tools and Automation

Modern teams benefit greatly from integrating automation into their weekly tracking cadence. Some of the most commonly used tools include:

- BI Dashboards (Looker, Tableau, Power BI): For automated access to updated performance numbers.

- Real-time Monitoring Tools (Datadog, Grafana): Visualizing latency, API error trends, and backend load.

- Issue Trackers (Jira, Linear): Providing continuity in task execution and linking insights to epics or bugs.

- Collaboration Spaces (Notion, Confluence): Keeping summaries and archives of previous weekly rituals.

How This Fits Into Broader AI Governance

Weekly rituals aren’t just about metrics — they’re foundational to responsible AI development. Tracking systems at this frequency introduces a layer of oversight that helps maintain transparency, user alignment, and ethical model tuning.

In regulated or high-risk industries like finance, healthcare, or education, weekly oversight also contributes to compliance checks, early detection of bias, and audit readiness. For this reason, governance teams can use the records from these rituals as a primary source of documentation.

Conclusion

The path to trustworthy, scalable AI starts with consistency. Weekly tracking rituals provide this through measurable accountability, continuous learning, and well-aligned cross-team coordination. They may look purely operational, but they carry real strategic weight in a fast-moving AI landscape.

Frequently Asked Questions (FAQ)

Q: How long should a typical weekly AI review last?

A: Ideally, between 45 and 60 minutes, with follow-ups handled asynchronously.

Q: Who should attend these weekly rituals?

A: Key stakeholders from data science, product, engineering, and UX teams.

Q: What metrics are most important to track weekly?

A: Model accuracy, latency, output quality, user engagement rates, and error frequencies.

Q: Can these rituals be done remotely or asynchronously?

A: Yes. Many teams use recordings, shared documents, and written recaps to support hybrid or async collaboration.

Q: How do weekly reviews support long-term AI development?

A: Weekly insights compound over time to offer trend data, track improvements, and inform retrospective decisions in quarterly planning.