How to Run AI Models Locally on Windows Without Internet

With the proliferation of powerful artificial intelligence (AI) tools, more developers and enthusiasts are exploring running AI models on their own machines. Doing so keeps data private, removes network latency, and eliminates the need for a constant internet connection. Running AI models offline on Windows is entirely possible and, with the right tools and setup, works smoothly.

In this article, we will explore the steps involved in running AI models locally on Windows, the benefits and challenges, and the tools required to get started. Whether you’re interested in natural language processing, image recognition, or data inference, this guide lays a strong foundation for offline AI development.

Why Run AI Models Locally?

Running AI models locally brings a range of advantages compared to cloud-based solutions:

- Privacy: Your data remains entirely on your machine, reducing risks of exposure or breaches.

- Speed: Inputs and outputs never cross the network, which removes round-trip latency. Raw inference speed still depends on your hardware.

- Reliability: No dependency on network stability or server uptime.

- Cost: You avoid premium cloud compute fees, especially during long or frequent inference sessions.

However, running AI locally comes with the trade-off of higher hardware requirements and a need for careful configuration. Luckily, many modern laptops and desktops with high-performance CPUs and GPUs are up to the task.

Prerequisites

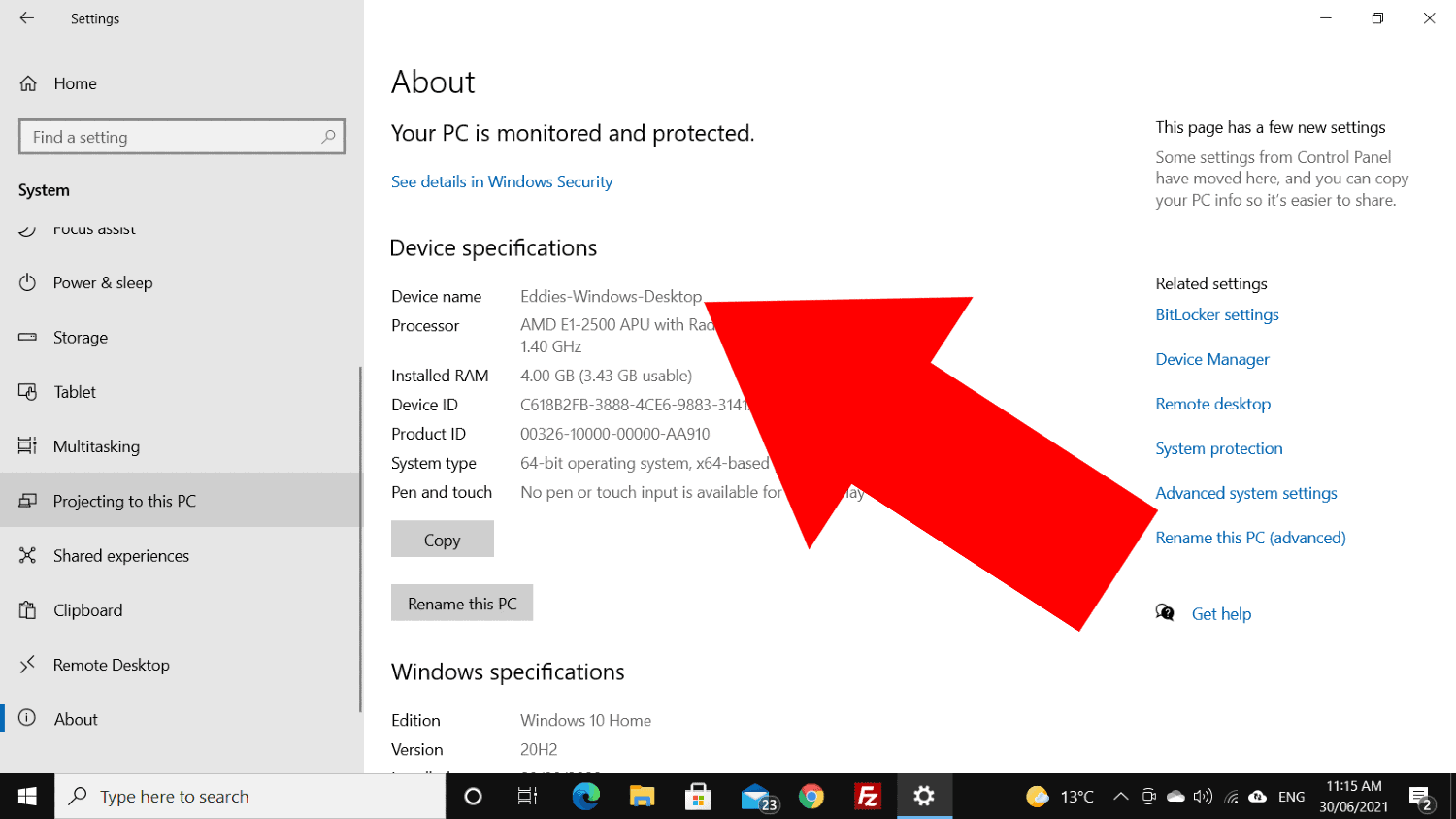

Before setting up a local AI environment on Windows, ensure your system has the following specifications:

- Operating System: Windows 10 (64-bit) or later

- Processor: At least a quad-core CPU (Intel Core i7 or AMD Ryzen 7 recommended)

- Memory: Minimum 16 GB RAM

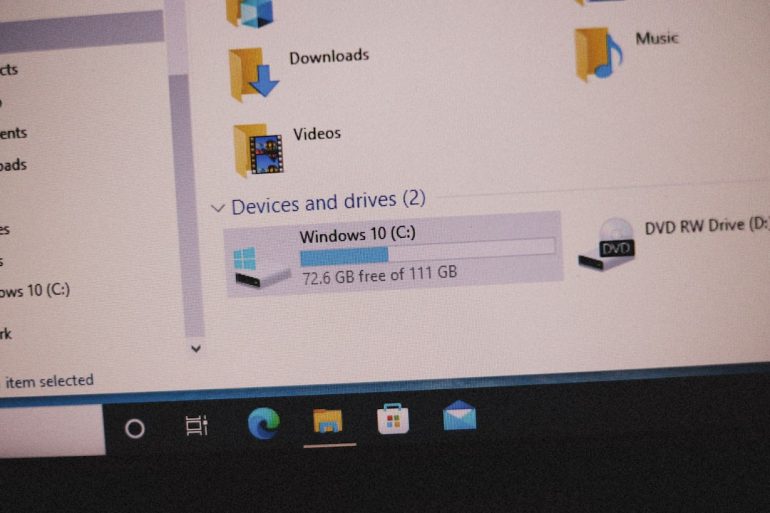

- Storage: At least 50 GB of free SSD space

- GPU: Dedicated GPU (NVIDIA RTX 2060 or better preferred for acceleration)

Additionally, install the following software components:

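- Python: A Python 3 release supported by your chosen framework, installed from the official site (python.org)

- Visual Studio Build Tools: Needed only when a dependency must be compiled from source; many packages ship prebuilt Windows wheels

- CUDA and cuDNN: For NVIDIA GPU support (optional but highly recommended); check which versions your framework expects before installing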
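Part of the checklist above can be verified from Python itself. The following is a minimal sketch using only the standard library; the function name `check_environment` and the `MIN_FREE_GB` constant are illustrative choices, and the script does not check RAM or GPU, which need extra tooling.

```python
import platform
import shutil
import sys

MIN_FREE_GB = 50  # free-space figure taken from the storage requirement above


def check_environment(path="."):
    """Return basic facts about the machine and the drive that holds `path`."""
    free_bytes = shutil.disk_usage(path).free
    return {
        "os": platform.system(),                 # "Windows" on a Windows machine
        "python": platform.python_version(),     # e.g. "3.x.y"
        "python_is_64bit": sys.maxsize > 2**32,  # True for a 64-bit interpreter
        "free_disk_gb": round(free_bytes / 1e9, 1),
        "enough_disk": free_bytes >= MIN_FREE_GB * 1e9,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Running it from the folder where you plan to store models reports the free space on that drive.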

Popular AI Frameworks That Support Local Inference

Several open-source AI frameworks can be installed on Windows and support complete offline functionality:

- PyTorch: A versatile and widely used deep learning framework. Pip-installable with GPU support.

- TensorFlow: Google’s popular deep learning library. Saved models load from disk without network access. Note that TensorFlow 2.10 was the last release with GPU support on native Windows; later versions need WSL2 for GPU acceleration.

- ONNX Runtime: An inference engine for models in the Open Neural Network Exchange (ONNX) format. It is optimized for fast inference and runs models exported from many frameworks.

- Transformers by Hugging Face: By default it fetches models from the internet, but you can download them once and then load them offline.

Steps to Run Your AI Model Locally

Here’s a basic roadmap to get your AI model inference pipeline running entirely offline:

- Install Dependencies: While still online, use pip to install your preferred AI library and additional tools such as NumPy and SciPy.

- Download Models: Download models while online and save them locally (e.g., using Hugging Face’s from_pretrained with cache_dir).

- Use Local Source: Point your inference scripts to the local file path of the model.

- Set Environment Variables: Ensure CUDA and Python paths are recognized by the system.

- Run the Inference: Load inputs, process with the model, and observe outputs.
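The download-once, load-locally steps above could look roughly like this with Hugging Face Transformers. This is a sketch under assumptions: the model name `distilbert-base-uncased` and the `models/` folder are illustrative, and the helper names `download_once`, `is_downloaded`, and `load_offline` are hypothetical. It uses `save_pretrained` to write a self-contained copy rather than relying on the cache directory.

```python
from pathlib import Path

MODEL_ID = "distilbert-base-uncased"                    # illustrative model (assumption)
LOCAL_DIR = Path("models") / "distilbert-base-uncased"  # hypothetical local folder


def download_once(model_id=MODEL_ID, local_dir=LOCAL_DIR):
    """Run while online: fetch the model and tokenizer, then save them to disk."""
    from transformers import AutoModel, AutoTokenizer

    AutoTokenizer.from_pretrained(model_id).save_pretrained(local_dir)
    AutoModel.from_pretrained(model_id).save_pretrained(local_dir)


def is_downloaded(local_dir=LOCAL_DIR):
    """True if a saved model configuration is present in local_dir."""
    return (Path(local_dir) / "config.json").is_file()


def load_offline(local_dir=LOCAL_DIR):
    """Run while offline: read files from disk only, never from the network."""
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(local_dir, local_files_only=True)
    model = AutoModel.from_pretrained(local_dir, local_files_only=True)
    return tokenizer, model
```

Call `download_once()` while connected, then use `load_offline()` afterwards. Setting the `HF_HUB_OFFLINE=1` environment variable before starting Python is another way to stop the Hugging Face libraries from attempting network calls.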

Here’s a simple code example using PyTorch:

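```python
import torch
from torchvision import models

# Build the architecture without downloading pretrained weights
model = models.resnet18(weights=None)

# Load locally saved weights
state_dict = torch.load("resnet18_weights.pth", map_location="cpu")
model.load_state_dict(state_dict)
model.eval()

# Run inference on a random tensor shaped like one 224x224 RGB image
input_tensor = torch.rand(1, 3, 224, 224)
with torch.no_grad():  # gradients are not needed for inference
    output = model(input_tensor)

print(output)
```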
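The example above runs on the CPU. To confirm that PyTorch can actually see your GPU, a small check like the following may help. It is a sketch only: the helper name `pick_device` is hypothetical, and the guarded import is there so the script still runs when PyTorch is not installed.

```python
try:
    import torch
except ImportError:  # PyTorch is not installed; only the CPU answer is possible
    torch = None


def pick_device():
    """Return "cuda" when PyTorch can see an NVIDIA GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print(f"Running on: {device}")
    if torch is not None:
        # In a real script, move the model to the same device with model.to(device).
        x = torch.rand(1, 3, 224, 224, device=device)
        print(x.device)
```

If this prints `cpu` on a machine with an NVIDIA card, the usual suspects are an outdated driver or a CPU-only PyTorch build.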

Challenges and Solutions

While empowering, running AI offline poses some challenges:

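- Large Model Sizes: Large models strain memory and disk space. Quantized or distilled versions are smaller and usually faster, at some cost in accuracy.

- Updating Models: An offline setup never updates itself. Download new model versions while online and copy them into place manually.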

- Dependency Conflicts: Isolate environments using virtualenv or conda to prevent conflicts.
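To see why quantized versions help, a rough estimate of weight size is the number of parameters times the bytes per parameter. The sketch below is illustrative arithmetic only. The 7-billion-parameter figure is a hypothetical example, and real memory use is higher because of activations and runtime overhead.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_size_gb(num_params, dtype="float32"):
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    num_params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: ~{weights_size_gb(num_params, dtype):.0f} GB")
```

On a machine with 16 GB of RAM, the float32 weights of this hypothetical model (about 28 GB) would not fit, while an int8 version (about 7 GB) would.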

Conclusion

Running AI models locally on Windows without an internet connection is absolutely feasible and comes with numerous benefits, especially for projects where privacy and autonomy are paramount. By using powerful frameworks like PyTorch and TensorFlow in combination with local resources, you can deploy, test, and utilize machine learning models completely offline.

Keep your dependencies well documented and update your toolset whenever you’re briefly online, so that your offline environment stays secure and efficient.
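One simple way to document dependencies is to record the exact versions installed in your environment. The sketch below uses only the standard library. The function name `snapshot_packages` and the file name `requirements-lock.txt` are illustrative choices.

```python
from importlib.metadata import distributions


def snapshot_packages():
    """Return sorted 'name==version' lines for every installed distribution."""
    lines = set()
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip entries whose metadata is missing or broken
            lines.add(f"{name}=={dist.version}")
    return sorted(lines, key=str.lower)


if __name__ == "__main__":
    # "requirements-lock.txt" is a hypothetical file name.
    with open("requirements-lock.txt", "w", encoding="utf-8") as fh:
        fh.write("\n".join(snapshot_packages()) + "\n")
```

Running `pip freeze > requirements.txt` produces a similar list from the command line.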

With the right preparation, the power of AI is truly at your fingertips, no cloud required.