Flare of Denial: Cloudflare Outage Analysis

In the early hours of a Wednesday morning in mid-2024, millions of users across the internet experienced a digital short-circuit. Websites failed to load, online services became unreachable, and frustration spread worldwide. The culprit was a widespread outage at Cloudflare, one of the internet’s largest content delivery network (CDN) and DDoS mitigation providers. Dubbed the “Flare of Denial” by affected communities, the incident is a stark reminder of how heavily the web depends on a handful of infrastructure giants to keep it functional.

TL;DR


Cloudflare experienced a major outage in mid-2024, affecting millions of websites and services globally. The root cause was a configuration error during a routine network upgrade, which cascaded into a critical failure of its edge routing infrastructure. Although service was fully restored in about 92 minutes, the outage reignited debates about centralized internet services and the risks they pose. It also prompted Cloudflare to publish a detailed post-incident review and commit to overhauling its infrastructure.

Understanding Cloudflare’s Role

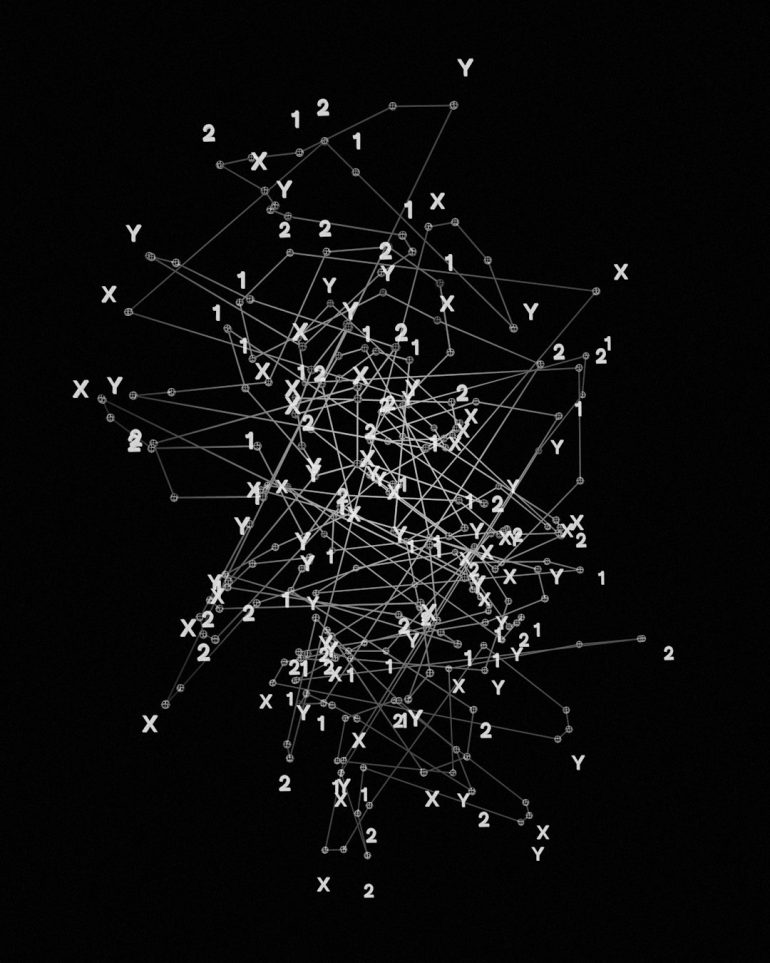

To fully grasp the gravity of this outage, it is important first to understand what Cloudflare does. Cloudflare operates a globally distributed network of data centers that help keep websites fast, secure, and always available. It provides:

- Content Delivery: Caching static assets near users to reduce latency.

- DDoS Protection: Absorbing large volumes of illegitimate traffic designed to overwhelm servers.

- DNS Services: Translating domain names into IP addresses.

- Edge Computing: Running code close to the user for real-time applications.

Given its ubiquity, even a temporary outage has magnified consequences. When Cloudflare falters, the internet itself stumbles.

The Events Leading Up to the Outage

According to Cloudflare’s official incident report, the outage originated during a routine rollout of network route optimizations. The company was implementing changes designed to improve the efficiency of BGP (Border Gateway Protocol) routing across its edge locations.

However, a misconfiguration in the route propagation logic caused the new BGP routes to be misadvertised globally. As a result, traffic bound for Cloudflare-protected websites was routed incorrectly, and much of it was black-holed (silently dropped), leaving sites unreachable for vast swaths of users.

Modern networks depend heavily on precise configuration and synchronization. Just one small deviation, particularly in routing protocols, can cascade into massive outages – and that’s exactly what happened here.
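To make this failure mode concrete, here is a minimal sketch of a simplified BGP-style best-path selection. It assumes routers prefer the route with the highest local preference and break ties on AS-path length; real BGP has many more tie-breakers, and Cloudflare's report does not describe its routes at this level. The prefix (from the 203.0.113.0/24 documentation range), next-hop names, and weights are hypothetical. The point is that the decision trusts the advertised weight, so one mis-set value can make an unusable route win.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    local_pref: int   # higher is preferred, as with BGP LOCAL_PREF
    as_path_len: int  # shorter is preferred when local_pref ties
    delivers: bool    # whether the next hop can actually forward traffic


def best_path(routes: list[Route]) -> Route:
    """Simplified BGP decision: highest local_pref, then shortest AS path.

    The decision never looks at `delivers`; routers trust the advertised
    attributes, which is what makes a mis-set weight dangerous.
    """
    return max(routes, key=lambda r: (r.local_pref, -r.as_path_len))


candidates = [
    Route("203.0.113.0/24", "edge-a", local_pref=100, as_path_len=2, delivers=True),
    Route("203.0.113.0/24", "edge-b", local_pref=100, as_path_len=3, delivers=True),
    # Hypothetical mis-weighted route: its next hop drops traffic (a black hole),
    # but an erroneous weight of 1000 makes it the most preferred path.
    Route("203.0.113.0/24", "blackhole", local_pref=1000, as_path_len=5, delivers=False),
]

chosen = best_path(candidates)
print(chosen.next_hop, chosen.delivers)  # blackhole False
```

Lowering the hypothetical weight on the bad route to 50 makes `edge-a` win again, which illustrates how a single parameter can decide whether traffic flows or disappears.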

Immediate Impact on the Internet

The outage lasted approximately 92 minutes from initial misconfiguration to full resolution, but within that short span, the domino effects were monumental. Some of the most noticeable implications included:

- Accessibility: Over 20 percent of the world’s top 1000 websites went offline or became intermittently accessible.

- Services Disrupted: APIs, login pages, payment gateways, and cloud applications relying on Cloudflare saw errors or latency spikes.

- Economic Impact: E-commerce platforms estimated losses ranging from $15 million to $30 million in that roughly 90-minute window.

Users in regions like North America, Europe, and Southeast Asia were hit the hardest. Popular platforms, including cryptocurrency exchanges, news sites, and even productivity tools, suffered from total or partial downtime.

Root Cause Analysis

Cloudflare’s engineering team acted with urgency, rolling back the faulty configuration and propagating corrected BGP announcements. A subsequent forensic investigation pinpointed the specific misstep: a parameter in the automated configuration tool responsible for weighting route preferences had been set incorrectly.

The investigation surfaced three key findings:

- Automation Risk: The tool failed to detect or reject configuration errors before deployment.

- Layered Diagnostics: Internal monitoring systems did not flag the issue early because it did not immediately breach the thresholds typically associated with traffic disruptions.

- Propagation Lag: Global BGP convergence delays worsened the recovery timeline.
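The automation-risk finding points at the kind of guardrail that was missing. The sketch below is a hypothetical pre-deployment check, not Cloudflare's tooling: it assumes an allowed weight range (`MIN_WEIGHT` and `MAX_WEIGHT`, invented here) and a set of next hops known to be healthy, and it refuses to deploy any change set that fails either check.

```python
from dataclasses import dataclass

# Hypothetical policy bounds for a route-preference weight.
MIN_WEIGHT, MAX_WEIGHT = 0, 200


@dataclass(frozen=True)
class RouteChange:
    prefix: str
    next_hop: str
    weight: int


def validate(changes: list[RouteChange], healthy_next_hops: set[str]) -> list[str]:
    """Return human-readable errors; an empty list means every change passed."""
    errors: list[str] = []
    for c in changes:
        if not MIN_WEIGHT <= c.weight <= MAX_WEIGHT:
            errors.append(f"{c.prefix}: weight {c.weight} outside [{MIN_WEIGHT}, {MAX_WEIGHT}]")
        if c.next_hop not in healthy_next_hops:
            errors.append(f"{c.prefix}: next hop {c.next_hop!r} is not known to be healthy")
    return errors


def deploy(changes: list[RouteChange], healthy_next_hops: set[str]) -> str:
    """Push the change set only if validation finds no errors at all."""
    errors = validate(changes, healthy_next_hops)
    if errors:
        raise ValueError("change set rejected:\n" + "\n".join(errors))
    return f"deployed {len(changes)} change(s)"


healthy = {"edge-a", "edge-b"}
print(deploy([RouteChange("203.0.113.0/24", "edge-a", 120)], healthy))
try:
    deploy([RouteChange("203.0.113.0/24", "blackhole", 1000)], healthy)
except ValueError as err:
    print(err)
```

Rejecting the whole change set, rather than skipping only the bad entries, keeps a partially applied configuration from reaching the network.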

Once identified, engineers gradually removed the errant routes and initiated tiered reactivation of services to avoid overloading the restored infrastructure during recovery.
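Tiered reactivation can be sketched as a ramp that raises the share of traffic in stages and stops advancing as soon as a health check fails. The tier fractions and the capacity model below are illustrative assumptions; the incident report as summarized here does not describe Cloudflare's actual tiers.

```python
from typing import Callable


def tiered_reactivation(tiers: list[float], is_healthy: Callable[[float], bool]) -> float:
    """Raise the share of traffic served one tier at a time.

    Returns the largest traffic fraction that passed its health check. The ramp
    stops at the first failing tier so recovering infrastructure is not overloaded.
    """
    served = 0.0
    for fraction in tiers:
        if not is_healthy(fraction):
            break  # hold at the last healthy tier rather than pushing on
        served = fraction
    return served


# Hypothetical capacity model: the recovering edge can absorb 60% of normal load.
capacity = 0.6
level = tiered_reactivation([0.01, 0.10, 0.50, 1.00], lambda f: f <= capacity)
print(level)  # 0.5
```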

Public and Industry Response

The immediate aftermath saw a flurry of criticism directed not just at Cloudflare but at the state of centralized web infrastructure. Prominent voices in the industry questioned the wisdom of having so much of the web’s backbone reliant on a single provider, no matter how robust.

“The Cloudflare outage highlights a systemic vulnerability, not a one-off glitch,” said cybersecurity analyst Anya Rhodes in a televised panel discussion. “More than 30% of the Fortune 500 depend on a few cloud providers. We need to take diversification seriously.”

Technical communities reacted with mixed feelings. Some applauded Cloudflare’s rapid transparency and detailed technical breakdown, while others criticized the failure to catch a preventable error, especially one rooted in automation logic.

Cloudflare’s Actions and Promises

Cloudflare didn’t take the criticism lightly. Within days, it published a 3,000-word post-mortem outlining both the technical failings and a roadmap for future prevention. Several commitments emerged from that document:

- Audit of Automation Tools: Ensuring human-in-the-loop processes for all edge changes going forward.

- Improved Monitoring: Deploying AI-powered predictive modeling to catch deviations earlier.

- Regional Isolation: Engineering the next-generation routing architecture to quarantine faults more effectively at the regional rather than global level.

- Visibility to Clients: Offering premium clients advanced alerts and better real-time diagnostics when service disruption is detected.
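The regional-isolation and human-in-the-loop commitments combine naturally into a staged rollout. This hypothetical sketch applies a change one region at a time, requires an approval callback before each region after the first (canary) one, and halts when a region's observed error rate exceeds a budget, so a bad change cannot reach every region at once. The region names, the 1% error budget, and the callbacks are assumptions for illustration, not details from Cloudflare's post-mortem.

```python
from typing import Callable


def staged_rollout(
    regions: list[str],
    apply_change: Callable[[str], None],
    error_rate: Callable[[str], float],
    approve: Callable[[str], bool],
    max_error_rate: float = 0.01,
) -> list[str]:
    """Apply a change region by region and return the regions that received it.

    - A human approval callback gates every region after the first (canary).
    - If a region's error rate exceeds the budget, the rollout halts, so the
      fault stays confined to the regions already changed.
    """
    done: list[str] = []
    for i, region in enumerate(regions):
        if i > 0 and not approve(region):
            break  # operator declined to continue
        apply_change(region)
        done.append(region)
        if error_rate(region) > max_error_rate:
            break  # stop before the fault spreads further
    return done


# Hypothetical observations: the change misbehaves once it reaches "eu".
applied: list[str] = []
observed = {"canary-eu": 0.002, "eu": 0.04, "na": 0.001, "apac": 0.001}
result = staged_rollout(
    ["canary-eu", "eu", "na", "apac"],
    apply_change=applied.append,
    error_rate=lambda r: observed[r],
    approve=lambda r: True,
)
print(result)  # ['canary-eu', 'eu']
```

A production version would also roll back the regions in `done` once the halt fires; the sketch only shows how the blast radius is capped.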

Cloudflare’s CEO, Matthew Prince, summarized the company’s position in a statement: “We recognize the trust our customers place in us. This outage was unacceptable by our own standards. Our engineering culture is rooted in learning, and we will learn from this.”

Lessons for the Internet Ecosystem

Perhaps the most important takeaway from the Flare of Denial is that no system, no matter how distributed or fault-tolerant, is immune to failure. As systems grow in complexity, so does the need for clarity and fail-safes at every step.

This incident underscores three essential truths:

- Redundancy is Not Just Hardware: Systems must be evaluated for architectural and provider-level redundancy to avoid single points of failure.

- Automation Needs Oversight: While automation accelerates progress, it also amplifies mistakes if not regularly audited and sandbox-tested.

- Communication Matters: Cloudflare’s timely and transparent communication helped defuse user frustration and restore confidence.

Going forward, IT departments, CTOs, and infrastructure architects are likely to rethink their dependency levels. The mantra of “don’t put all your eggs in one cloud” may finally get the attention it deserves.
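On the customer side, provider-level redundancy can be as simple as keeping an ordered list of providers and sending traffic to the first one that passes a health probe. The sketch below is a hypothetical illustration: the provider names and the probe are placeholders, and a production setup would usually implement failover in DNS or a load balancer rather than in application code.

```python
from typing import Callable, Optional


def choose_provider(providers: list[str], probe: Callable[[str], bool]) -> Optional[str]:
    """Return the first provider, in priority order, whose health probe succeeds.

    Returns None when every provider fails, signalling a full outage.
    """
    for name in providers:
        if probe(name):
            return name
    return None


# Hypothetical probe results during an outage at the primary provider.
status = {"primary-cdn": False, "secondary-cdn": True, "origin-direct": True}
print(choose_provider(["primary-cdn", "secondary-cdn", "origin-direct"], lambda n: status[n]))
# secondary-cdn
```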

Conclusion

The Flare of Denial was not just a technical failure; it was a wake-up call for the digital age. As internet infrastructure becomes more intertwined with daily life and commerce, the risks of centralized control grow disproportionately. Cloudflare has taken clear steps toward improvement, but the onus also lies on the broader community to evolve resilience strategies, distribute critical functions, and prepare for an increasingly complex web. Trust in the internet depends not just on better code, but on an ethos of collective responsibility, transparency, and continuous improvement.